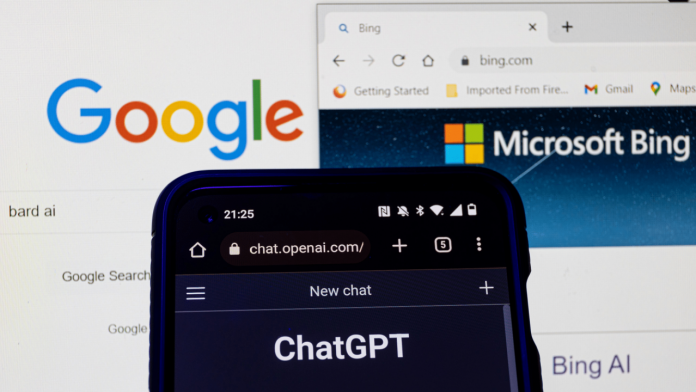

ChatGPT made a splash when it launched last year by offering an AI engine to the masses free of charge. Users can type in queries and receive human-like responses within seconds, whether that means composing an essay on the First Crusade or crafting a poem about Al Gore’s affinity for Toyota Prii. Traditional search engines return a list of website links that match a user’s query. ChatGPT instead relies on a large language model, trained on vast data sets, to generate sentences that closely mimic human responses. Some have likened it to a souped-up version of autocorrect.

ChatGPT quickly gained popularity, amassing an estimated 100 million active users by January and becoming the fastest-growing web platform ever. Its success prompted both Microsoft and Google to integrate AI into their search engines. Microsoft’s Bing, which incorporates GPT technology licensed from OpenAI, saw a 16% increase in traffic. Generative AI has also found its way into other products, including Microsoft Word, Excel, and PowerPoint and Google Workspace tools such as Gmail and Docs. Snapchat, Grammarly, and WhatsApp have jumped on the AI bandwagon as well.

However, not all AI chatbots are created equal. In the tests below, we compared responses from the free version of ChatGPT, which uses GPT-3.5, with those from the paid version (ChatGPT Plus), which uses GPT-4, as well as from Bing’s GPT-powered chatbot and Google’s Bard AI system. GPT stands for “generative pre-trained transformer.” Bard is currently in an invite-only beta, while Bing’s chatbot is free but requires Microsoft’s Edge web browser.